Deep Learning

In a recent blog post (Big Data…Marketing Magic or the Real Deal) we asked whether the ‘Big Data’ movement actually meant anything really new for data analytics. Our conclusion was that there is a real change going on in the tools and techniques that we use to access and manipulate really large datasets. I left open, however, the question of whether, as data miners, we need to develop new functions and algorithms to analyse all of this fabulous data, or whether we can simply apply our tried and tested techniques, such as decision trees, neural networks, clustering, association analysis and regression, to these data sources. This remains an open question, but there have been some really interesting developments in an area known as deep learning.

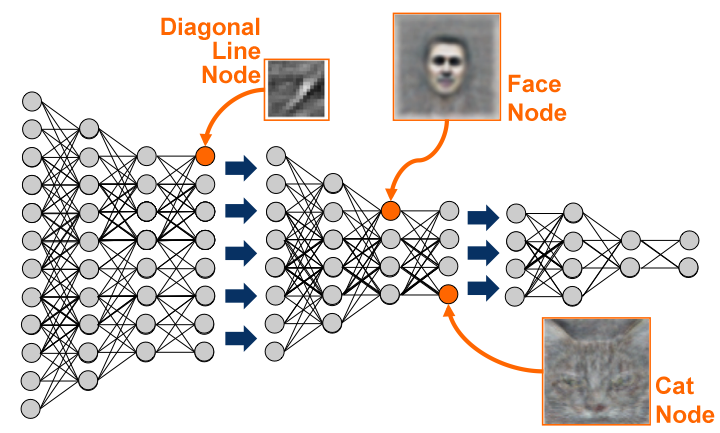

Deep learning refers to a relatively recently developed set of generative machine learning techniques that autonomously generate high-level representations from raw data sources and, using these representations, can perform typical machine learning tasks such as classification, regression and clustering. Many of the most important deep learning techniques are extensions of neural network methods, and a simple way to understand them is to think of multiple layers of neural networks linked together. The first layer takes the raw data as input and outputs a set of high-level features, which are passed to a further layer, which in turn generates a set of higher-level features. This continues for a number of layers until eventually an output (for example, a prediction) is produced.
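The layered flow described above can be sketched in a few lines of plain Python. This is only a minimal illustration of a forward pass: the layer sizes, the sigmoid activation and the helper names (`dense_layer`, `random_layer`, `forward`) are assumptions made for the example, and the weights are random rather than learned, so the intermediate values are not meaningful features. In a real deep learning system it is the training procedure that makes each layer's output a useful higher-level representation.

```python
import math
import random


def dense_layer(inputs, weights, biases):
    """One fully connected layer followed by a sigmoid activation."""
    outputs = []
    for weight_row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(weight_row, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs


def random_layer(n_in, n_out, rng):
    """Untrained (random) weights and zero biases, for illustration only."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(raw_input, layers):
    """Pass the raw input through every layer, keeping each layer's output."""
    activations = [raw_input]
    for weights, biases in layers:
        activations.append(dense_layer(activations[-1], weights, biases))
    return activations


rng = random.Random(0)
layer_sizes = [16, 8, 4, 1]  # hypothetical: 16 raw inputs -> 8 -> 4 -> 1 output
layers = [
    random_layer(n_in, n_out, rng)
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]
raw_pixels = [rng.random() for _ in range(layer_sizes[0])]

activations = forward(raw_pixels, layers)
for depth, values in enumerate(activations):
    print(f"layer {depth}: {len(values)} values")
```

Each entry in `activations` is the representation at one depth: the raw input first, then progressively smaller sets of derived values, ending in a single output that could be read as a prediction.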

The image below shows a simplified illustration of this, where a stack of neural networks is used to classify images. While the data presented to the network would be raw pixel values, internally the network would generate much higher-level features. For example, there might be a node in the network that responds to the presence of diagonal lines in an image or, at an even higher level, to the presence of faces (this is reigniting very interesting old discussions about the idea of the grandmother cell!).

Google have recently been doing a lot of work in this area and have credited the improvements in the voice recognition technologies in their Android phones to the use of deep learning (see here). In another much publicized piece of work, a collaboration between Google and some US universities, deep learning techniques were used to extract high-level representations of images taken from YouTube videos (see a New York Times article here and the resulting International Conference on Machine Learning paper here). Not surprisingly, this system discovered that it was very important to be able to identify cats in YouTube videos!

While the deep learning approach has been shown to work very well for certain applications (and very interestingly many of these applications are based on unlabelled data sets), it is worth mentioning that this came at a pretty high computational cost. From the ICML paper:

“the model has 1 billion connections, the dataset has 10 million 200×200 pixel images downloaded from the Internet…We train this network using model parallelism and asynchronous SGD on a cluster with 1,000 machines (16,000 cores) for three days”
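To put the quoted figures in perspective, a little back-of-the-envelope arithmetic helps. The sketch below uses only the numbers in the quote. It counts pixels rather than bytes because the quote does not state the number of colour channels, and it assumes the cores are spread evenly across the machines and that "three days" means a full 72 hours of wall-clock time.

```python
# Figures taken directly from the quoted passage.
images = 10_000_000
width = height = 200
machines = 1_000
cores = 16_000
training_days = 3

pixels_per_image = width * height        # 40,000 pixels per image
total_pixels = images * pixels_per_image # 400,000,000,000 pixels in the dataset
cores_per_machine = cores // machines    # 16, assuming an even split
core_hours = cores * training_days * 24  # 1,152,000, assuming full 24-hour days

print(f"pixels per image:  {pixels_per_image:,}")
print(f"total pixels:      {total_pixels:,}")
print(f"cores per machine: {cores_per_machine}")
print(f"core-hours:        {core_hours:,}")
```

In other words, the experiment processed on the order of 400 billion pixel positions and consumed roughly 1.15 million core-hours, which gives a sense of why this kind of work was out of reach for most practitioners at the time.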

Deep learning is a very interesting development in data analytics, and there are likely to be more and more examples of deep learning techniques being applied to all kinds of different analytics applications. Maybe the techniques coming out of the deep learning movement will go on to be recognized as the machine learning equivalents of the ‘Big Data’ tools and techniques that have emerged to access and manipulate really large datasets. www.deeplearning.net is a website well worth keeping an eye on for developments in this area.